Research

Japan Science and Technology Agency (JST) CREST:

[Society 5.0 System Software] Creation of System Software for Society 5.0 by Integrating Fundamental Theories and System Platform Technologies

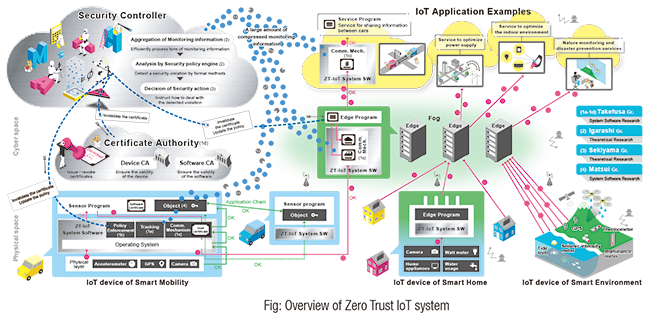

Zero Trust IoT by Formal Verification and System Software

Principal Investigator: TAKEFUSA, Atsuko, Professor, Information Systems Architecture Science Research Division

In Society 5.0, sensor data from security cameras, indoor and outdoor environmental sensors, industrial robots, and a wide range of other IoT devices will be collected and stored in the cloud. These data will also be processed by AI, which is expected to create new value, e.g., improving quality of life, monitoring the natural environment, preventing and mitigating disasters, and making urban environments more efficient. However, such IoT systems face a variety of cybersecurity threats, and cases of enormous damage to public infrastructure have been reported.

This study aims to realize a secure IoT system based on the concept of Zero Trust (ZT-IoT) by fusing theoretical research with system software research. Zero Trust is a cybersecurity design methodology in which computers and data are protected not by relying unconditionally on VPNs, firewalls, and other perimeter defenses, but by continuously monitoring, assessing, and improving security measures. By combining formal verification with system software technologies, the study aims to enable the development of highly secure Zero Trust-based IoT systems.

On the theoretical side, the research will provide mathematical proofs for validating the trust chain of the IoT system and establish a new formal verification technique that works together with dynamic verification techniques to counter undiscovered threats. On the system software side, the research will secure the ZT-IoT system by developing execution isolation, automatic detection, and automatic countermeasure techniques that support this trust chain, in conjunction with the theoretical findings. Furthermore, the security of ZT-IoT will be assured in an accountable manner, to promote the social acceptance of IoT systems and contribute to the realization of Society 5.0.
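The trust chain is not specified further on this page. Purely as an illustration, the following Python sketch assumes a measured-boot style chain, similar in spirit to a TPM PCR "extend" operation: each software component's hash is folded into a running value in boot order, and a verifier accepts a device only if its reported components reproduce a known-good value. The function names (`extend`, `measure_chain`, `verify_chain`) and the component strings are hypothetical; this is not the project's verification technique.

```python
import hashlib
import hmac


def extend(register: bytes, component: bytes) -> bytes:
    """Return H(register || H(component)), mirroring a PCR-style extend (hypothetical helper)."""
    measurement = hashlib.sha256(component).digest()
    return hashlib.sha256(register + measurement).digest()


def measure_chain(components: list[bytes]) -> bytes:
    """Fold every component, in boot order, into a register that starts as 32 zero bytes."""
    register = bytes(32)
    for component in components:
        register = extend(register, component)
    return register


def verify_chain(components: list[bytes], expected: bytes) -> bool:
    """Accept the device only if its chain reproduces the known-good value.

    Any modified, missing, or reordered component changes the final digest.
    compare_digest avoids leaking timing information during the comparison.
    """
    return hmac.compare_digest(measure_chain(components), expected)


if __name__ == "__main__":
    golden = measure_chain([b"bootloader v1", b"kernel v5", b"sensor-app v2"])
    print(verify_chain([b"bootloader v1", b"kernel v5", b"sensor-app v2"], golden))
    print(verify_chain([b"bootloader v1", b"kernel v5-tampered", b"sensor-app v2"], golden))
```

Because each step hashes the previous register, a single check of the final value covers the whole chain; in a Zero Trust setting such a check would be repeated continuously rather than trusted once at boot.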

[Trusted quality AI systems] Core technologies for trusted quality AI systems

Research Director: AIZAWA, Akiko (Vice Director-General, NII; Professor, Digital Content and Media Sciences Research Division)

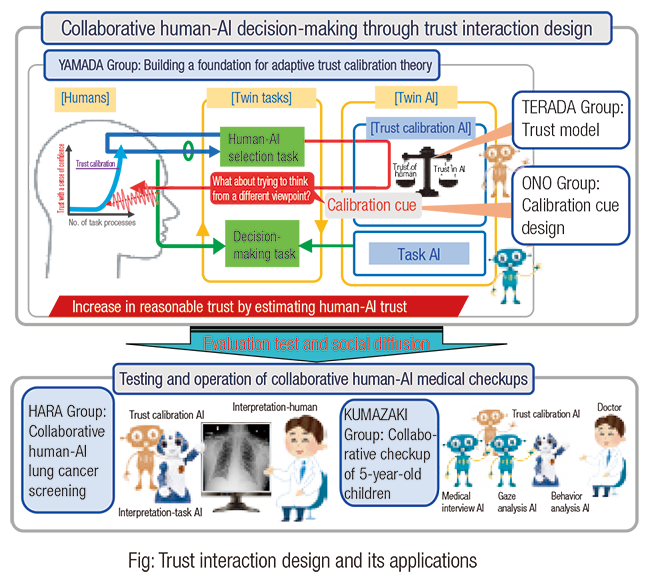

Trust Interaction Design for Convincing Human-AI Cooperative Decision Making and its Social Penetration

Principal Investigator: YAMADA, Seiji, Professor, Digital Content and Media Sciences Research Division

AI systems often make mistakes because of the algorithms they use, the quality and quantity of their data and knowledge, and biases. However, because of their own preconceptions and biases, most end users cannot properly assess AI performance, so they tend either to accept the solutions output by AI uncritically or to reject them outright. The latter phenomenon, known in cognitive science as "algorithm aversion," is a fundamental problem in human-AI cooperative decision-making, and solving it is a major challenge for achieving trustworthy AI. Solving this problem and optimizing the performance of human-AI cooperative decision-making cannot easily be achieved merely by improving the performance of the AI itself, as has been the approach in the past. Instead, trust must be optimized so that humans can accurately estimate AI performance through their interaction with the AI.

In light of this, the project aims to develop a theory of trust interaction design in which the AI detects a breakdown in the trust relationship (over-trust or under-trust) based on cognitive biases and values, and adaptively issues calibration cues that prompt the human to calibrate trust, thereby optimizing the trust relationship and strengthening the human's sense of confidence. A further aim is to achieve the social diffusion of this method through its application to medical checkups.

In human-AI cooperative decision-making, the human repeatedly decides whether to make a decision themselves or to leave it to the AI. The trust calibration AI computes trust values for the human and for the AI using a trust model, and compares the rational choice implied by which of these values is larger with the human's actual choice to detect whether over-trust or under-trust has occurred. If a trust breakdown is detected, the AI presents a calibration cue that prompts trust calibration. In response to the cue, the human takes the initiative in recalibrating trust. In this way, humans can build optimal trust with a sense of confidence.
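The detection step described above can be sketched as a simple comparison. The following Python example is a minimal sketch under stated assumptions: the project's actual trust model is not described here, so the two trust values are taken as given numbers (hypothetically, estimated probabilities that the human or the AI decides correctly), ties are assumed to favor the human, and the class names, function names, and cue wording are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Choice(Enum):
    SELF = "self"      # the human makes the decision
    DELEGATE = "ai"    # the human leaves the decision to the AI


class TrustState(Enum):
    CALIBRATED = "calibrated"
    OVER_TRUST = "over-trust"    # delegating although the human is rated more reliable
    UNDER_TRUST = "under-trust"  # deciding alone although the AI is rated more reliable


@dataclass
class TrustEstimate:
    human: float  # hypothetical output of a trust model for the human
    ai: float     # hypothetical output of a trust model for the AI


def rational_choice(trust: TrustEstimate) -> Choice:
    """Pick whichever agent the trust model rates higher (ties go to the human)."""
    return Choice.DELEGATE if trust.ai > trust.human else Choice.SELF


def detect_breakdown(trust: TrustEstimate, actual: Choice) -> TrustState:
    """Compare the rational choice with the human's actual choice."""
    if actual == rational_choice(trust):
        return TrustState.CALIBRATED
    # Delegating when deciding alone was rational indicates over-trust in the AI.
    if actual is Choice.DELEGATE:
        return TrustState.OVER_TRUST
    # Deciding alone when delegating was rational indicates under-trust in the AI.
    return TrustState.UNDER_TRUST


def calibration_cue(state: TrustState) -> Optional[str]:
    """Return a hypothetical cue prompting recalibration, or None if trust is calibrated."""
    if state is TrustState.OVER_TRUST:
        return "The AI may be less reliable than you on cases like this one."
    if state is TrustState.UNDER_TRUST:
        return "The AI has been more reliable than you on cases like this one."
    return None


if __name__ == "__main__":
    state = detect_breakdown(TrustEstimate(human=0.6, ai=0.85), Choice.SELF)
    print(state, calibration_cue(state))
```

In this sketch the cue only informs; consistent with the description above, the decision to recalibrate is left to the human rather than enforced by the AI.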
